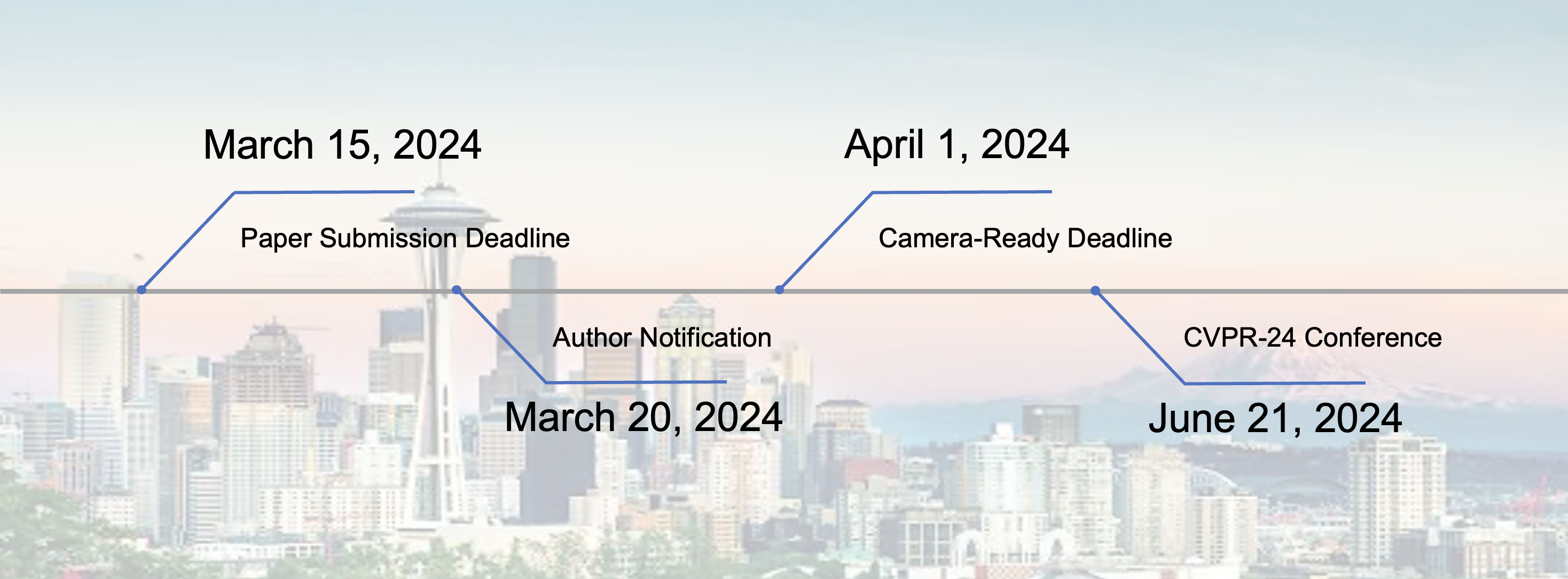

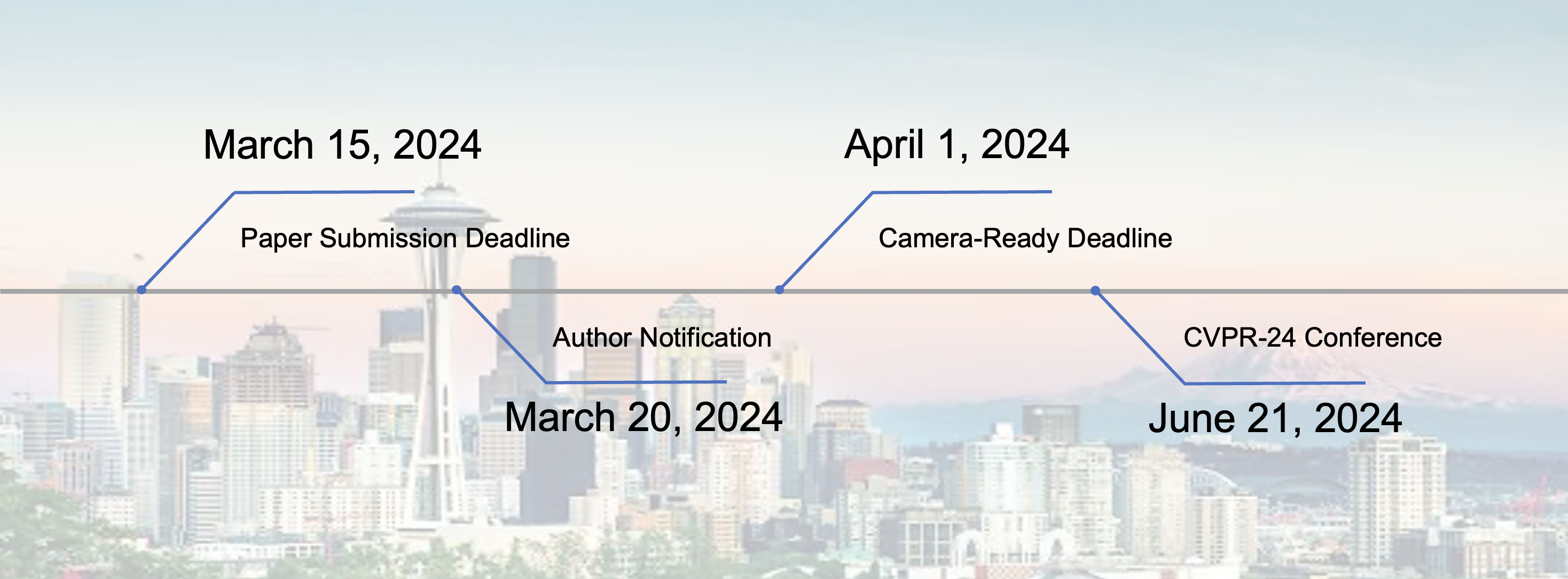

Tentative Important Dates

Artificial intelligence (AI) has entered a new era with the emergence of foundation models (FMs). Built on massive numbers of parameters and vast amounts of training data, these models demonstrate powerful generative capabilities and have become a dominant force in computer vision, revolutionizing a wide range of applications. Alongside their potential benefits, the increasing reliance on FMs has also exposed their vulnerability to adversarial attacks. These malicious attacks apply imperceptible perturbations to input images or prompts, which can cause the models to misclassify objects or generate adversary-intended outputs. Such vulnerabilities pose significant risks in safety-critical applications such as autonomous vehicles and medical diagnosis, where incorrect predictions can have dire consequences. By studying and addressing the robustness challenges associated with FMs, we can help practitioners build more robust and reliable FMs across various domains.

The workshop will bring together researchers and practitioners from the computer vision and machine learning communities to explore the latest advances and challenges in adversarial machine learning, with a focus on the robustness of foundation models. The program will consist of invited talks by leading experts in the field, as well as contributed talks and poster sessions featuring the latest research. In addition, the workshop will host a challenge on adversarial attacks against foundation models.

We believe this workshop will provide a unique opportunity for researchers and practitioners to exchange ideas, share the latest developments, and collaborate on the challenges of making foundation models robust and secure. We expect the workshop to generate insights and discussions that help advance the field of adversarial machine learning and contribute to the development of more secure and robust foundation models for computer vision applications.

Workshop Schedule

| Event | Start time | End time |
|---|---|---|
| Opening Remarks | 8:30 | 8:40 |
| Challenge Session | 8:40 | 9:00 |
| Invited Talk #1: Prof. Chaowei Xiao | 9:00 | 9:30 |
| Invited Talk #2: Prof. Bo Li | 9:30 | 10:00 |
| Invited Talk #3: Prof. Zico Kolter | 10:00 | 10:30 |
| Invited Talk #4: Prof. Neil Gong | 10:30 | 11:00 |
| Poster Session #1 | 11:00 | 12:30 |
| Lunch | 12:30 | 13:30 |
| Invited Talk #5: Prof. Ludwig Schmidt | 13:30 | 14:00 |
| Invited Talk #6: Prof. Florian Tramèr | 14:00 | 14:30 |
| Invited Talk #7: Prof. Tom Goldstein | 14:30 | 15:00 |
| Invited Talk #8: Prof. Alex Beutel | 15:00 | 15:30 |
| Poster Session #2 | 15:30 | 16:30 |

| Speaker | Affiliation |
|---|---|
| Bo Li | University of Chicago |
| Tom Goldstein | |
| Ludwig Schmidt | University of Washington |
| Chaowei Xiao | University of Wisconsin, Madison |
| Florian Tramèr | ETH Zurich |
| Alex Beutel | OpenAI |
| Zico Kolter | Carnegie Mellon University |
| Neil Gong | Duke University |

Aishan

|

|

Beihang |

|

Jiakai

|

|

Zhongguancun |

|

Mo

|

|

Johns Hopkins |

|

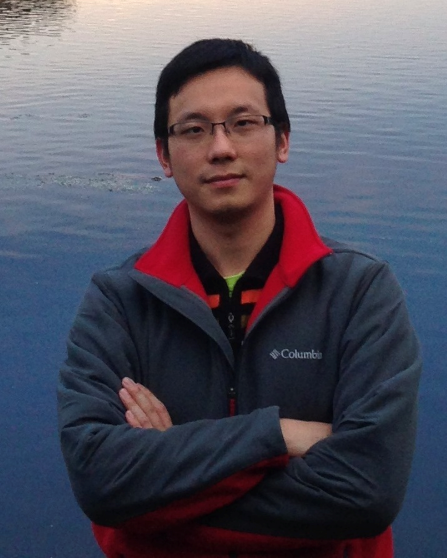

Qing

|

|

A*STAR |

|

Xiaoning

|

|

Monash |

|

Xinyun

|

|

Google Brain |

|

Felix

|

|

AI at Meta |

|

Xianglong

|

|

Beihang |

|

VishalM

|

|

Johns Hopkins University |

|

Dawn

|

|

UC Berkeley |

|

Alan

|

|

Johns Hopkins |

|

Philip

|

|

Oxford |

|

Dacheng

|

|

The University of Sydney |

Rank List

| Rank | Team |
|---|---|
| 1 | team_aoliao |
| 2 | AdvAttacks |
| 3 | team_Aikedaer |
| 4 | daqiezi |
| 5 | team_theone |
| 6 | AttackAny |
| 7 | team_bingo |
| 8 | Ynu AI Sec |
| 9 | Tsinling |
| 10 | abandon |

Challenge Chair

| Name | Affiliation |
|---|---|
| Jiakai | Zhongguancun |
| Zonglei | Beihang |
| Hainan | Institute |
| Zhenfei | Shanghai AI Laboratory & The University of Sydney |
| Haotong | ETH Zurich |
| Jing | Shanghai AI Laboratory |
| Xianglong | Beihang |
| Shengshan | Huazhong University of Science and Technology |
| Long | Huazhong University of Science and Technology |
| Yanjun | Zhongguancun |
| Yue | OpenI |
| Yisong | Beihang |
| Jin | Beihang |
| Tianyuan | Beihang |
| Siyang | Beihang |